FormatImporter

|

Collect

1. Overview

FormatImporter is used to import external data in common formats to Sensors Analytics. It currently supports reading CSV files, Nginx logs, MySQL databases, Oracle databases, and JSON data that conforms to the data format.

2. Usage

2.1. Operating Environment

This tool supports running in a Linux environment and also supports running in a Windows environment. It requires Python 3.4 or higher. Additionally, if you need to import data from MySQL or Oracle databases, make sure that the relevant client programs are installed on your machine.

When deploying in a Windows environment, pay attention to file encoding issues. Please refer to section 4.8 of this document for specific precautions.

2.2. Download the tool

Click this link to download. The script is a compressed package that can be used after extraction.

2.3. Get the Data Receiving URL

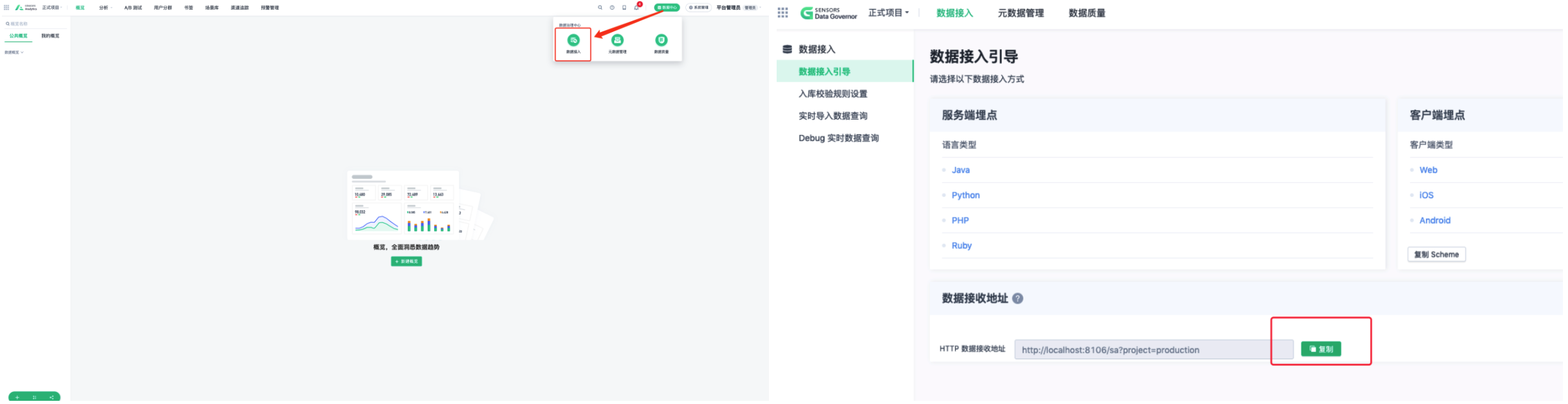

First, obtain the Data Receiving URL from the home page of Sensors Analytics. The method of obtaining it is shown in the figure below (slightly different methods for different versions):

①,

②,

2.4. Import data in CSV format

2.4.1. Import events

Assume there is a CSV file describing the following user behaviors (refer to the examples/events.csv file in the code package):

user_id,action,time,item_id,item_name,item_cate bug29,view,2018-05-12 13:01:11,13245,男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲,男装 bug29,buy,2018-05-12 13:05:03,13245,男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲,男装 小武,view,2018-05-13 10:20:32,23421,New Order Technique 2CD豪华版 欧版行货 全新未拆,音像 菠菜,view,2018-05-13 20:42:53,3442,NUK安抚奶嘴宝宝防胀气安慰奶嘴乳胶迪士尼安睡型,母婴Import this data into the Sensors Analytics system, using the user_id column as the user ID, the time column as the event time, the action column as the event name, and import only item_id and item_name as event properties:

python3 format_importer.py csv_event \ --url 'http://localhost:8106/sa?project=xxx' \ --distinct_id_from 'user_id' \ --is_login \ # 标示 distinct_id 为登录 ID,若 distinct_id 为匿名 ID,则去掉 --is_login --timestamp_from 'time' \ --event_from 'action' \ --filename './examples/events.csv' \ --property_list 'item_id,item_name' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉 --debugNote: --url is set to the Data Receiving URL. The specific method of obtaining it can be found in section 2.3 of this document or in the Data Access Guide.

2.4.2. User Attributes

Assume there is a CSV file describing the following user attributes (refer to examples/profiles.csv in the code package):

user_id,gender,is_member,score bug29,男,true,131 小武,女,false,Import these data into the SensData system, using the user_id column as the user ID:

python3 format_importer.py csv_profile \ --url 'http://localhost:8106/sa?project=xxx' \ --distinct_id_from 'user_id' \ --is_login \ # 标示 distinct_id 为登录 ID,若 distinct_id 为匿名 ID,则去掉 --is_login --filename './examples/profiles.csv' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉 --debug2.4.3. Import Item Data

Assume there is a CSV file describing the following item data (refer to examples/item.csv in the code package):

item_type,item_id,item_name,item_cate,action view,13245,男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲,男装,买买买 buy,13245,男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲,男装,缺货 view,23421,New Order Technique 2CD豪华版 欧版行货 全新未拆,音像,缺货 view,3442,NUK安抚奶嘴宝宝防胀气安慰奶嘴乳胶迪士尼安睡型,母婴,买买Import these data into the SensData system, specifying the item_type and item_id field values of the item data respectively:

python3 format_importer.py csv_item \ --url 'http://localhost:8106/sa?project=xxx' \ --item_type 'item_type' \ --item_id 'item_id' \ --property_list 'item_name,item_cate,action' \ --filename './examples/item.csv' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉 --debug2.4.4. Import User Associations

Note: Importing user associations requires v1.13.5 or above.

Assume there is a CSV file describing the following user associations (refer to examples/signup.csv in the code package):

user_id,device_id 小武,ac0eadfb-cd5d-44b6-8a21-079862773c11 菠菜,2903f1d4-e20d-4866-8614-66d9101a3bd3 bug29,0c0c93f5-c747-4c1a-acfc-e75279720da1Import these data into the SensData system, specifying the login_id_from and anonymous_id_from parameters as the login ID and anonymous ID of the user associations respectively:

python3 format_importer.py csv_signup \ --url 'http://localhost:8106/sa?project=xxx' \ --login_id_from 'user_id' \ --anonymous_id_from 'device_id' \ --filename './examples/signup.csv' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉 --debug2.5. Import Nginx Logs

2.5.1. Import Events

Assume there are Nginx logs describing the following user behaviors (refer to examples/events.log in the code package):

123.4.5.6 - [12/May/2018:13:01:11 +0800] "GET /item?id=13245&action=view&cate=%e7%94%b7%e8%a3%85" 200 1127 "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.85 Safari/537.36" "http://fake_web.com/login.html" "男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲" "bug29" 123.4.5.6 - [12/May/2018:13:05:03 +0800] "GET /item?id=13245&action=buy&cate=%e7%94%b7%e8%a3%85" 200 1127 "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.85 Safari/537.36" "http://fake_web.com/login.html" "男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲" "bug29" 123.4.5.7 - [13/May/2018:10:20:32 +0800] "GET /item?id=23421&action=view&cate=%e9%9f%b3%e5%83%8f" 200 1127 "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36" "http://www.baidu.com?q=abc" "New Order Technique 2CD豪华版 欧版行货 全新未拆" "小武" 123.8.5.7 - [13/May/2018:20:42:53 +0800] "GET /item?id=&action=view&cate=%e6%af%8d%e5%a9%b4" 200 1127 "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36" "http://www.baidu.com?q=abc" "NUK安抚奶嘴宝宝防胀气安慰奶嘴乳胶迪士尼安睡型" "菠菜"The corresponding format configuration for Nginx is as follows:

log_format compression '$remote_addr [$time_local] "$request" $status $bytes_sent "$http_user_agent" "$http_referer" "$title" "$user_id"'; access_log /data/nginx_log/access.log compression;Import these data into the SensData system, using $user_id as the user ID, $time_local as the event occurrence time, the action value of the $request parameters after parsing as the event name, and only import two event attributes: the id value of the parsed $request parameters as item_id, and the custom variable $title as item_name:

python3 format_importer.py nginx_event \ --url 'http://localhost:8106/sa?project=xxx' \ --distinct_id_from 'user_id' \ --is_login \ # 标示 distinct_id 为登录 ID,若 distinct_id 为匿名 ID,则去掉 --is_login --timestamp_from 'time_local' \ --timestamp_format '%d/%b/%Y:%H:%M:%S %z' \ --event_from '__request_param_action' \ --filename './examples/events.log' \ --log_format '$remote_addr [$time_local] "$request" $status $bytes_sent "$http_user_agent" "$http_referer" "$title" "$user_id"' \ --property_list '__request_param_id,title' \ --property_list_cnames 'item_id,item_name' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉 --debug2.5.2. Import User Attributes

Assume there are Nginx logs describing the following user attributes (refer to examples/profiles.log in the code package):

123.4.5.6 - [12/May/2018:13:01:11 +0800] "POST /profile?user=bug29&is_member=true" 200 1127 "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.85 Safari/537.36" "http://fake_web.com/login.html" "男" "131" 123.4.5.7 - [13/May/2018:10:20:32 +0800] "POST /profile?user=%e5%b0%8f%e6%ad%a6&is_member=false" 200 1127 "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36" "http://www.baidu.com?q=abc" "女" ""The corresponding format configuration for Nginx is as follows:

log_format compression '$remote_addr [$time_local] "$request" $status $bytes_sent "$http_user_agent" "$http_referer" "$gender" "$score"'; access_log /data/nginx_log/access.log compression;Import these data into the SensData system, using the user value of the parsed $request parameters as the user ID, and import three user attributes: the custom variables $gender and $score, as well as the is_member value of the parsed $request parameters:

python3 format_importer.py nginx_profile \ --url 'http://localhost:8106/sa?project=xxx' \ --distinct_id_from '__request_param_user' \ --is_login \ # 标示 distinct_id 为登录 ID,若 distinct_id 为匿名 ID,则去掉--is_login --filename './examples/profiles.log' \ --log_format '$remote_addr [$time_local] "$request" $status $bytes_sent "$http_user_agent" "$http_referer" "$gender" "$score"' \ --property_list 'gender,score,__request_param_is_member' \ --property_list_cnames 'gender,score,is_member' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉--debug2.5.3. Import Item Attributes

Assume that Nginx logs describe the following item data (refer to examples/item.log):

123.4.5.6 - [12/May/2018:13:01:11 +0800] "GET /item?id=13245&action=view&cate=%e7%94%b7%e8%a3%85" 200 1127 "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.85 Safari/537.36" "http://fake_web.com/login.html" "男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲" "bug29" 123.4.5.6 - [12/May/2018:13:05:03 +0800] "GET /item?id=13245&action=buy&cate=%e7%94%b7%e8%a3%85" 200 1127 "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.85 Safari/537.36" "http://fake_web.com/login.html" "男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲" "bug29" 123.4.5.7 - [13/May/2018:10:20:32 +0800] "GET /item?id=23421&action=view&cate=%e9%9f%b3%e5%83%8f" 200 1127 "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36" "http://www.baidu.com?q=abc" "New Order Technique 2CD豪华版 欧版行货 全新未拆" "小武" 123.8.5.7 - [13/May/2018:20:42:53 +0800] "GET /item?id=&action=view&cate=%e6%af%8d%e5%a9%b4" 200 1127 "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36" "http://www.baidu.com?q=abc" "NUK安抚奶嘴宝宝防胀气安慰奶嘴乳胶迪士尼安睡型" "菠菜"The format of the corresponding Nginx configuration is as follows:

log_format compression '$remote_addr [$time_local] "$request" $status $bytes_sent "$http_user_agent" "$http_referer" "$title" "$user_id"'; access_log /data/nginx_log/access.log compression;Import these data into the Sensory System, and configure --item_type and --item_id to specify the item_type and item_id field values of the item data respectively:

python3 format_importer.py nginx_item \ --url 'http://localhost:8106/sa?project=xxx' \ --item_id 'user_id' \ --item_type '__request_param_action' \ --filename './examples/item.log' \ --log_format '$remote_addr [$time_local] "$request" $status $bytes_sent "$http_user_agent" "$http_referer" "$title" "$user_id"' \ --property_list '__request_param_id,title' \ --property_list_cnames '$gender,$score' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉--debug2.5.4. Import User Associations

Note: Importing user associations requires version v1.13.5 or above

Assume that Nginx logs describe the following user associations (refer to examples/signup.log):

123.4.5.6 - [12/May/2018:13:01:11 +0800] "POST /login?user_id=bug29&device_id=0c0c93f5-c747-4c1a-acfc-e75279720da1" 200 1127 "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.85 Safari/537.36" "http://fake_web.com/login.html" "男" "131" 123.4.5.7 - [13/May/2018:10:20:32 +0800] "POST /login?user_id=%e5%b0%8f%e6%ad%a6&device_id=ac0eadfb-cd5d-44b6-8a21-079862773c11" 200 1127 "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36" "http://www.baidu.com?q=abc" "女" ""Import these data into the Sensory System, and configure --login_id_from and --anonymous_id_from to specify the login ID and anonymous ID of the user associations respectively:

python3 format_importer.py nginx_signup \ --url 'http://localhost:8106/sa?project=xxx' \ --login_id_from '__request_param_user_id' \ --anonymous_id_from '__request_param_device_id' \ --filename './examples/signup.log' \ --log_format '$remote_addr [$time_local] "$request" $status $bytes_sent "$http_user_agent" "$http_referer" "$gender" "$score"' \ --property_list '__request_param_user_id,__request_param_device_id' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉--debug2.6. Import data from MySQL

Note that the use of MySQL import requires the installation of related libraries. Please run the following command to install pymysql:

python3 -m pip install PyMySQL --upgrade2.6.1. Import events

Assume that a MySQL database describes the following user actions (refer to examples/events.sql):

drop table if exists events; create table events ( user_id varchar(100), action varchar(100), time timestamp, item_id int, item_name text, item_cate varchar(100)); insert into events values('bug29', 'view', '2018-05-12 13:01:11', 13245, '男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲', '男装'); insert into events values('bug29', 'buy', '2018-05-12 13:05:03', 13245, '男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲', '男装'); insert into events values('小武', 'view', '2018-05-13 10:20:32', 23421, 'New Order Technique 2CD豪华版 欧版行货 全新未拆', '音像'); insert into events values('菠菜', 'view', '2018-05-13 20:42:53', 3442, 'NUK安抚奶嘴宝宝防胀气安慰奶嘴乳胶迪士尼安睡型', '母婴');Import these data into the Sensory System, using the user_id column as the user ID, the time column as the time the event occurred, the action column as the event name, and importing only the item_id and item_name as event properties. The ORDER BY at the end is mainly to ensure that the sequence of multi-queries remains consistent. In the event of a partial import failure, you can use --skip_cnt configuration to skip the number of successfully imported rows:

python3 format_importer.py mysql_event \ --url 'http://localhost:8106/sa?project=xxx' \ --distinct_id_from 'user_id' \ --is_login \ # 标示 distinct_id 为登录 ID,若 distinct_id 为匿名 ID,则去掉 --is_login --timestamp_from 'time' \ --event_from 'action' \ --user 'root' \ --password 'pass' \ --host 'localhost' \ --port 3307 \ --db 'test_db' \ --sql 'select user_id, action, time, item_id, item_name from events order by time;' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉 --debug2.6.2. Import user properties

Assume that a MySQL database describes the following user attributes (refer to examples/profiles.sql):

drop table if exists profiles; create table profiles ( user_id varchar(100), gender varchar(20), is_member bool, score int); insert into profiles values('bug29', '男', true, 131); insert into profiles values('小武', '女', false, null);Import these data into the Sensory System, using the user_id column as the user ID, and using the remaining columns as the user properties. The ORDER BY at the end is mainly to ensure that the sequence of multi-queries remains consistent. In the event of a partial import failure, you can use the --skip_cnt configuration to skip the number of successfully imported rows:

python3 format_importer.py mysql_profile \ --url 'http://localhost:8106/sa?project=xxx' \ --distinct_id_from 'user_id' \ --is_login \ # 标示 distinct_id 为登录 ID,若 distinct_id 为匿名 ID,则去掉 --is_login --user 'root' \ --password 'pass' \ --host 'localhost' \ --port 3307 \ --db 'test_db' \ --sql 'select user_id, gender, is_member, score from profiles order by user_id;' \ --bool_property_list 'is_member' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉 --debug2.6.3. Import item properties

Assume that a MySQL database describes the following item data (refer to examples/events.sql):

drop table if exists events; create table events ( user_id varchar(100), action varchar(100), time timestamp, item_id int, item_name text, item_cate varchar(100)); insert into events values('bug29', 'view', '2018-05-12 13:01:11', 13245, '男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲', '男装'); insert into events values('bug29', 'buy', '2018-05-12 13:05:03', 13245, '男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲', '男装'); insert into events values('小武', 'view', '2018-05-13 10:20:32', 23421, 'New Order Technique 2CD豪华版 欧版行货 全新未拆', '音像'); insert into events values('菠菜', 'view', '2018-05-13 20:42:53', 3442, 'NUK安抚奶嘴宝宝防胀气安慰奶嘴乳胶迪士尼安睡型', '母婴');Import these data into the Sensory System, using the item_id column in the table as the item_id of the item data, and the action column as the item_type. The ORDER BY at the end is mainly to ensure that the sequence of multi-queries remains consistent. In the event of a partial import failure, you can use the --skip_cnt configuration to skip the number of successfully imported rows:

format_importer.py mysql_item \ --url 'http://localhost:8106/sa?project=xxx' \ --item_type 'action' \ --item_id 'item_id' \ --user 'root' \ --password 'root1234' \ --host 'localhost' \ --port 3306 \ --db 'sa_item' \ --sql 'select user_id, gender, is_member, score from events order by user_id' \ --debug \ # 校验数据格式,不会导入数据,正式使用的时候去掉 --debug2.6.4. Import User Associations

Note: Importing user associations requires version v1.13.5 or above

Assuming that there is a MySQL database describing the following user relationships (refer to examples/signup.sql):

drop table if exists users; create table users ( user_id varchar(100), device_id varchar(100)); insert into users values('bug29', '0c0c93f5-c747-4c1a-acfc-e75279720da1'); insert into users values('小武', 'ac0eadfb-cd5d-44b6-8a21-079862773c11'); insert into users values('菠菜', '2903f1d4-e20d-4866-8614-66d9101a3bd3');Import these data into the Sensors Analytics system, using --login_id_from and --anonymous_id_from to specify the login ID and anonymous ID of the user relationships. The final ORDER BY is mainly to ensure consistent order of multiple data queries. If importing fails halfway, you can skip importing successful rows by configuring --skip_cnt:

python3 format_importer.py mysql_signup \ --url 'http://localhost:8106/sa?project=xxx' \ --login_id_from 'user_id' \ --anonymous_id_from 'device_id' \ --user 'root' \ --password 'root1234' \ --host 'localhost' \ --port 3306 \ --db 'sa_user' \ --sql 'select user_id, device_id from users order by user_id;' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉 --debug2.7. Import the JSON formatted logs

Users can also write logs to files, with each line containing data that conforms to the [g16]data format[/g16]. Assuming there are logs that describe the following events, user attributes, and item data (refer to examples/json_data.json).

{"type":"track","time":1526101271000,"distinct_id":"bug29","properties":{"item_id":13245.0,"item_name":"\u7537\u58eb\u62a4\u8033\u4fdd\u6696\u9e2d\u820c\u76ae\u5e3d\u5e73\u9876\u516b\u89d2\u5e3d\u5934\u5c42\u725b\u76ae\u5e3d\u5b50\u65f6\u5c1a\u4f11\u95f2"},"event":"view","time_free":true} {"type":"track","time":1526101503000,"distinct_id":"bug29","properties":{"item_id":13245.0,"item_name":"\u7537\u58eb\u62a4\u8033\u4fdd\u6696\u9e2d\u820c\u76ae\u5e3d\u5e73\u9876\u516b\u89d2\u5e3d\u5934\u5c42\u725b\u76ae\u5e3d\u5b50\u65f6\u5c1a\u4f11\u95f2"},"event":"buy","time_free":true} {"type":"track","time":1526178032000,"distinct_id":"\u5c0f\u6b66","properties":{"item_id":23421.0,"item_name":"New Order Technique 2CD\u8c6a\u534e\u7248 \u6b27\u7248\u884c\u8d27 \u5168\u65b0\u672a\u62c6"}, "event":"view","time_free":true} {"type":"track","time":1526215373000,"distinct_id":"\u83e0\u83dc","properties":{"item_id":3442.0,"item_name":"NUK\u5b89\u629a\u5976\u5634\u5b9d\u5b9d\u9632\u80c0\u6c14\u5b89\u6170\u5976\u5634\u4e73\u80f6\u8fea\u58eb\u5c3c\u5b89\u7761\u578b"},"event":"view","time_free":true} {"type":"profile_set","time":1526263297951,"distinct_id":"bug29","properties":{"gender":"\u7537","is_member":true,"score":131.0},"time_free":true} {"type":"profile_set","time":1526263297951,"distinct_id":"\u5c0f\u6b66","properties":{"gender":"\u5973","is_member":false},"time_free":true} {"type":"item_set","properties":{"name":"yuejz","OrderPaid":12.1},"item_id":"item_id","time":1566022866941,"item_type":"item_type"} {"type":"item_set","properties":{"name":"yuejz"},"item_id":"item_id","time":1566022866941,"item_type":"item_type"} {"type":"item_set","time":1566023226152,"properties":{"OrderTime":"2019-07-01 12:02:36","OrderPaid":12.1},"item_id":"item_id","item_type":"item_type"} {"type":"item_delete","item_id":"item_id","properties":{"OrderPaid":12.1,"OrderTime":"2019-07-01 12:02:36"},"item_type":"item_type"}Note: If the distinct_id in the event or user attribute data is the login ID, you need to add the "$is_login_id": true property in the properties to indicate.

Import these data into the Sensors Analytics system:

python3 format_importer.py json \ --url 'http://localhost:8106/sa?project=xxx' \ --path './examples/json_data.json' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉 --debug2.8. Import data from Oracle

Note that importing data from Oracle requires installing the relevant library. Please run the following command to install cx_Oracle, and make sure the Oracle client package is installed on the machine:

python3 -m pip install cx_Oracle --upgrade2.8.1. Import events

Assuming that an Oracle database describes the following user behaviors (refer to examples/events.plsql):

drop table if exists events; create table events ( user_id varchar(100), action varchar(100), time timestamp, item_id int, item_name text, item_cate varchar(100)); insert into events values('bug29', 'view', '2018-05-12 13:01:11', 13245, '男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲', '男装'); insert into events values('bug29', 'buy', '2018-05-12 13:05:03', 13245, '男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲', '男装'); insert into events values('小武', 'view', '2018-05-13 10:20:32', 23421, 'New Order Technique 2CD豪华版 欧版行货 全新未拆', '音像'); insert into events values('菠菜', 'view', '2018-05-13 20:42:53', 3442, 'NUK安抚奶嘴宝宝防胀气安慰奶嘴乳胶迪士尼安睡型', '母婴');Import these data into the Sensors Analytics system, using the user_id column as the user ID, the time column as the time the event occurred, and the action column as the event name. Only import item_id and item_name as event properties. The final ORDER BY is mainly to ensure consistent order of multiple data queries. If importing fails halfway, you can skip importing successful rows by configuring --skip_cnt:

python3 format_importer.py oracle_event \ --url 'http://localhost:8106/sa?project=xxx' \ --distinct_id_from 'user_id' \ --is_login \ # 标示 distinct_id 为登录 ID,若 distinct_id 为匿名 ID,则去掉 --is_login --timestamp_from 'time' \ --event_from 'action' \ --user 'root' \ --password 'pass' \ --dsn '127.0.0.1/orcl' \ --sql 'select USER_ID as "user_id", ACTION as "action", TIME as "time", ITEM_ID as "item_id", ITEM_NAME as "item_name" from events order by time' \ --case_sensitive true --debug # 校验数据格式,不会导入数据,正式使用的时候去掉 --debug2.8.2. Import user attributes

Assuming that an Oracle database describes the following user attributes (refer to examples/profiles.plsql).

drop table if exists profiles; create table profiles ( user_id varchar(100), gender varchar(20), is_member bool, score int); insert into profiles values('bug29', '男', true, 131); insert into profiles values('小武', '女', false, null);Import these data into the Sensors Analytics system, using the user_id column as the user ID, and all remaining columns as user attributes. The final ORDER BY is mainly to ensure consistent order of multiple data queries. If importing fails halfway, you can skip importing successful rows by configuring --skip_cnt:

python3 format_importer.py oracle_profile \ --url 'http://localhost:8106/sa?project=xxx' \ --distinct_id_from 'user_id' \ --is_login \ # 标示 distinct_id 为登录 ID,若 distinct_id 为匿名 ID,则去掉--is_login --user 'root' \ --password 'pass' \ --dsn '127.0.0.1/orcl \ --sql 'select USER_ID as "user_id", GENDER as "gender", IS_MEMBER as "is_member", SCORE as "score" from profiles order by user_id' \ --case_sensitive true --bool_property_list 'is_member' \ --debug # 校验数据格式,不会导入数据,正式使用的时候去掉--debug2.8.3. Import item data

Assuming that an Oracle database describes the following item data (refer to examples/events.plsql):

drop table if exists events; create table events ( user_id varchar(100), action varchar(100), time timestamp, item_id int, item_name text, item_cate varchar(100)); insert into events values('bug29', 'view', '2018-05-12 13:01:11', 13245, '男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲', '男装'); insert into events values('bug29', 'buy', '2018-05-12 13:05:03', 13245, '男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲', '男装'); insert into events values('小武', 'view', '2018-05-13 10:20:32', 23421, 'New Order Technique 2CD豪华版 欧版行货 全新未拆', '音像'); insert into events values('菠菜', 'view', '2018-05-13 20:42:53', 3442, 'NUK安抚奶嘴宝宝防胀气安慰奶嘴乳胶迪士尼安睡型', '母婴');Import these data into the Sensors Analytics system, using the item_id column in the database as the item_id of the item data, the action column as the item_type of the item data, and only import item_cate and item_name as event properties. The final ORDER BY is mainly to ensure consistent order of multiple data queries. If importing fails halfway, you can skip importing successful rows by configuring --skip_cnt:

python3 format_importer.py oracle_item \ --url 'http://localhost:8106/sa?project=xxx' \ --item_id 'item_id' \ --item_type 'action' \ --user 'root' \ --password 'pass' \ --dsn '127.0.0.1/orcl' \ --sql 'select ITEM_ID as "item_id", ACTION as "action", ITEM_CATE as "item_cate", ITEM_NAME as "item_name" from events order by time' \ --case_sensitive true --debug # 校验数据格式,不会导入数据,正式使用的时候去掉--debug2.8.4. Import user relationships

Note: Importing user relationships requires [g20]v1.13.5[/g20] or above

Assuming there is an Oracle database that describes the following user associations (refer to the code package examples/signup.plsql):

create table users ( user_id varchar2(100), device_id varchar(200)); insert into users (user_id, device_id) values('bug29', '0c0c93f5-c747-4c1a-acfc-e75279720da1'); insert into users (user_id, device_id) values('小武', 'ac0eadfb-cd5d-44b6-8a21-079862773c11'); insert into users (user_id, device_id) values('菠菜', '2903f1d4-e20d-4866-8614-66d9101a3bd3'); commit;To import data into the Sensors Analytics system, use --login_id_from and --anonymous_id_from to specify the login ID and anonymous ID of the user association. The ORDER BY at the end is mainly to ensure that the order of querying data is consistent. This way, if the import fails halfway, you can skip the number of successfully imported rows by configuring --skip_cnt:

python3 format_importer.py oracle_item \ --url 'http://localhost:8106/sa?project=xxx' \ --login_id_from 'USER_ID' \ --anonymous_id_from 'DEVICE_ID' \ --user 'root' \ --password 'pass' \ --dsn '127.0.0.1/orcl' \ --sql 'select USER_ID as "user_id",DEVICE_ID as "device_id" from users order by user_id' \ --case_sensitive true --debug # 校验数据格式,不会导入数据,正式使用的时候去掉--debug2.9. Import from configuration file

FormatImporter supports reading parameters from a configuration file. The method is to add @<config file path> after the sub-command. The downloaded source code package includes several default configurations in the conf directory, which can be modified before use.

Taking 2.2.1 as an example, we can write the import parameters to the configuration file first (refer to the code package conf/csv_event.conf):

url: http://localhost:8106/sa distinct_id_from: user_id is_login event_from: action timestamp_from: time filename: ./examples/events.csv property_list: item_id,item_name debug # 校验数据格式,不会导入数据,正式使用的时候去掉 --debugThen execute:

python3 format_importer.py csv_event @./conf/csv_event.confThis method can be used to import other types of data as well.

2.10. Notes

- Before importing data into Sensors Analytics, please confirm whether the distinct_id in the data is the login ID or anonymous ID. If it is a login ID, you need to add the --is_login parameter to the import command.

- Select a subset of data first, and test with the --debug option before formally importing. With the --debug option, it enters debugging mode and does not actually import data. For each piece of data, if it is successful, the sent data will be printed to standard output; otherwise, an error message will be printed. After execution, it prints how many records were read and how many errors occurred.

- When importing CSV/Nginx logs, you can first use the head -1000 file_name > test_file method to import a part of the data into the test file, and then import it using the test file.

- When importing MySQL data, you can add LIMIT 1000 after the query statement and test the import.

- During runtime, a log will be generated in the format_importer directory, with the name format_importer.log, which contains more complete debugging information than the output. If there is too much output on the screen after adding --debug, you can check the log for error information and debugging information.

- Due to the complexity of the parameters, it is recommended to use the configuration file to pass the parameters. You can check the conf directory after decompressing for specific configuration file examples.

- For CSV log import, it is necessary to ensure that the log file is a valid CSV format. It is recommended to read the 【How to escape CSV】 related content in the common questions of this article.

- Due to the limitations of Nginx log format, the property names imported may not be easily readable, such as: __request_param_action, etc. It is highly recommended to use property_list_cnames to convert them into more readable property names.

- For MySQL import, if the SQL statement is long, it is prone to shell escape errors after passing it to the program. It is recommended to check the log in the format_impoter directory when an error occurs. The first log after startup will write the passed parameters, please carefully check whether they match the passed SQL. In addition, when the SQL statement is long, it is recommended to use the --filename option to write the SQL statement into a file and pass it.

- For MySQL imports, if the SQL statement is designed to join two tables, then you need to use an alias or column name when specifying the column name, rather than < Table name > .< List > . For details, see the FAQ of this article [Import two tables by joining them with MySQL. Why do I get a message indicating that distinct_id/timestamp/event is empty when there is data?] Related content.

- If you want to improve the import speed, it is recommended to generate a log file using --output_file first, and then use BatchImporter or HDFSImporter to import the data.

3. Usage details

3.1. Subcommand Explanation

A subcommand is the first parameter after executing a script, such as executing in 2.1

python3 format_importer.py csv_event \ --url 'http://localhost:8106/sa?project=xxx' \ --event_default 'UserBuy' \ --distinct_id_from 'user' \ --timestamp_from 'buy_time' \ --filename 'buy.csv'The subcommand used is csv_event, which means converting CSV format file into event import. Currently, there are 17 subcommands supported:

| Subcommand Name | Explanation |

|---|---|

| csv_profile | Converts CSV format file into user attribute import |

| csv_event | Converts CSV format file into event import |

| csv_item | Converts CSV format file into item data import |

| csv_signup | Converts CSV format file into user association import |

| mysql_profile | Converts data from MySQL database into user attribute import |

| mysql_event | Converts data from MySQL database into event import |

| mysql_item | Converts data from MySQL database into item data import |

| mysql_signup | Import user relationship from MySQL database |

| nginx_profile | Import user attributes from Nginx logs |

| nginx_event | Import events from Nginx logs |

| nginx_item | Import item data from Nginx logs |

| nginx_signup | Import user relationship from Nginx logs |

| json | Import JSON logs, note that logs are not differentiated as event, profile, item, or signup. |

| oracle_profile | Import user attributes from Oracle database |

| oracle_event | Import events from Oracle database |

| oracle_item | Import item data from Oracle database |

| oracle_signup | Import user relationship from Oracle database |

If you want to see which parameters are supported by a specific subcommand, you can add -h after the subcommand to get all the parameters and descriptions, for example:

python3 format_importer.py csv_event -h python3 format_importer.py json -h3.2. Public Parameters

Common public parameters include:

| Parameter Name | Alias | Required | Description |

|---|---|---|---|

| --url | -l | Either -l or output_file is required | Data Receiving URL |

| --output_file | -O | Either -O or url is required | The output file name, where each line is a JSON in the specified format. |

| --project | -j | No | The specified project name, default is "default" |

| --skip_cnt | -c | No | Ignore the first run, if the run fails, you can use this configuration to specify to skip the first few lines |

| --debug | -D | No | If specified, it means using debug mode, no data will be imported, only data will be displayed in stdout, see Debug Mode for details |

| --quit_on_error | -Q | No | If selected, the process will exit with a single error log |

| -- log_level | -lv | No | The minimum log output level, default is DEBUG |

In addition, when importing data from CSV tables, Nginx logs, MySQL databases, or Oracle databases, it is necessary to distinguish whether to import event, profile, item, or signup data, as they have different common parameters. When importing JSON logs, only the above common parameters can be set.

3.3. Common parameters for sub-commands

3.3.1. Sub-commands related to events

| Parameter name | Alias | Optional/Required | Explanation |

|---|---|---|---|

| --distinct_id_from | -df | Required | Specify a column as distinct_id |

| --is_login | Optional | Whether the distinct_id is a login ID. The default is no | |

| --event_from | -ef | Either event_from or event_default is required | Specify a column as event name |

| --event_default | -ed | Either event_from or event_default is required | Specify a fixed string as event name |

| --timestamp_from | -tf | Either timestamp_from or timestamp_default is required | Specify column as time |

| --timestamp_default | -td | Choose either timestamp_default or timestamp_from, one is required | Specify fixed time string as time |

| --timestamp_format | -tf | Optional | Used together with timestamp_from to specify the time format. The default format is %Y-%m-%d %H:%M:%S |

3.3.2. profile related subcommands

| Parameter Name | Alias | Optional/Required | Explanation |

|---|---|---|---|

| --distinct_id_from | -df | Required | Specify column as distinct_id |

| --is_login | Optional | Whether distinct_id is login ID, default is no |

3.3.3. Subcommand related to item

| Parameter name | Alias | Optional/Required | Explanation |

|---|---|---|---|

| --item_type | Required | Specify a column as item_type | |

| --item_id | Required | Specify a column as item_id |

3.3.4. Subcommand related to signup

| Parameter name | Alias | Optional/Required | Explanation |

|---|---|---|---|

| --login_id_from | Required | Specify the column as login ID. | |

| --anonymous_id_from | Required | Specify the column as anonymous ID. |

3.4. Import other parameters in CSV format.

| Parameter Name | Alias | Required | Explanation |

|---|---|---|---|

| --filename | -f | Yes | CSV file path. |

| --property_list | -pl | No | Comma-separated selected properties. For example, -p name,time will import columns name and time as properties. If not specified, all columns will be imported as properties. |

| --skip_identify | -i | No | Columns specified here will not be automatically identified as their respective data types. For example, when configuring --skip_identify name,id, columns name and id will be imported as strings without type inference. If not specified, all selected columns will be automatically identified with data types. |

| --ignore_value | No | Specify certain values as empty. For example, when specifying --ignore_value null ", all occurrences of "null" will be treated as empty values. | |

| --csv_delimiter | No | The column delimiter for CSV files. The default value is ','. It accepts only single characters as parameters, or \ + ASCII code. For example, \9 represents \t. | |

| --csv_quotechar | No | The quote character for CSV files, which is used to specify the start and end of CSV strings. The default value is '"' It accepts only single characters as parameters, or \ + ASCII code. For example, \9 represents \t. | |

| --csv_prefetch_lines | No | CSV file read preview lines, which is used to determine the column types. The default value is -1, which means to read the entire file. Note that if the data distribution is uneven (e.g., some fields are missing in the first few rows but present in the later rows), do not add this parameter. | |

| --file_encoding | No | Set the encoding format of the CSV file. The default value is utf-8. | |

| --list_type | No | Specify an attribute as a list. Use comma to separate the selected attributes, for example, --list_type list_a, list_b will import list_a and list_b columns as list format. list_type:list_a, list_b A list format data needs to be separated by |, for example: 1|2|3 |

3.5. Other parameters for importing Nginx logs

| Parameter Name | Alias | Required | Description |

|---|---|---|---|

| --filename | -f | Yes | Path of the Nginx log file. |

| --log_format | -F | yes | Nginx log configuration, similar to '"$ remote_addr" "$ time_local" "$ http_refer" "$ status"'. |

| --property_list | -pl | yes | A comma-separated list of selected properties, for example, --property_list http_refer, status will import the http_refer and status columns as properties. |

| --skip_identify | -i | no | The corresponding columns will not be automatically identified as data types. For example, --skip_identify request_user, status will import request_user and status as strings without type identification. If not specified, all selected columns will be automatically identified as data types. |

| --url_fields | -uf | no | The corresponding columns will be parsed as URLs, separated by commas. After parsing, properties with names like __<field_name>_<parsing_content> will be generated, including netloc, path, query, param_<parameter_name>. For example, for $my_url field value http://www.abc.com/path/to/mine?k1=v1&k2=2 , it will be parsed as {"__my_url_netloc": "www.abc.com","__my_url_path": "/path/to/mine", "__my_url_query":"k1=v1&k2=v", "__my_url_param_k1": "v1","__my_url_param_k2":2}. Note that these fields can be configured in the property_list. The default is "http_referer". |

| --filter_path | -fp | no | Filter the corresponding paths, multiple selections are allowed. The path here refers to the $request path and supports regular expressions. For example --filter_path '.*\.gif' --filter_path '/index\.html' will filter requests for gif and index. |

| --ip_from | -if | No | Only valid for events. Which field is used as the IP address. If specified, the IP address for each data entry is the value of the corresponding field. The default value is $remote_addr. |

| --ignore_value | No | Specify some values to be treated as empty, such as specifying --ignore_value null then all nulls are regarded as empty values. | |

| --property_list_cnames | No | Comma-separated property corresponding names, which need to correspond to --property_list one by one. |

3.6. Other parameters for importing MySQL data

| Parameter Name | Alias | Required | Explanation |

|---|---|---|---|

| --user | -u | Yes | The username required to connect to the MySQL database. |

| --password | -p | Yes | The password required to connect to the MySQL database. |

| --host | -i | Yes | The address required to connect to the MySQL database. |

| --port | -P | Yes | The port required to connect to the MySQL database. |

| --db | -d | Yes | The name of the corresponding MySQL database, only one can be specified at a time. |

| --sql | -q | Either --sql or filename is required. | Query statement, it is recommended to add order by and other methods to ensure consistent order of multiple query results. |

| --filename | -f | Either sql or filename is required. | File path of the query statement, it is recommended to add order by and other methods to ensure consistent order of multiple query results. |

| --bool_property_list | -bp | No | Comma-separated list of boolean type properties, the corresponding property values of 1 will be converted to true, and 0 will be converted to false. |

| --case_sensitive | -cs | No | Whether the imported property names are case sensitive, note that if case insensitive, they will all be converted to uppercase. The default is true. |

3.7. Other parameters for importing JSON logs

| Parameter name | Alias | Required | Explanation |

|---|---|---|---|

| --path | -p | Yes | Path of the log file/directory |

Note when importing JSON logs, if the log directory is passed, it will traverse all the files in the next level of the directory and import them in alphabetical order. This parameter does not support nested directories.

3.8. Other parameters for importing Oracle data

| Parameter Name | Alias | Required | Explanation |

|---|---|---|---|

| --user | -u | Yes | Username required to connect to the Oracle database. |

| --password | -p | Yes | Password required to connect to the Oracle database. |

| --dsn | -dsn | Yes | DSN required to connect to the Oracle database. |

| --sql | --q | Either sql or filename is required | Query statement, it is recommended to add order by to ensure the order consistency of multiple query results |

| --filename | --f | Either sql or filename is required | Path of the file containing the query statement, it is recommended to add order by to ensure the order consistency of multiple query results |

| --bool_property_list | --bp | No | Comma-separated list of bool type attributes. The corresponding attribute values of 1 will be converted to true, and 0 will be converted to false |

| --case_sensitive | --cs | No | Whether the imported attribute names are case-sensitive. Note that if case-insensitive, they will all be converted to uppercase. The default is false |

4. Frequently Asked Questions

1. Can CSV headers with Chinese characters be supported?

According to the explanation in the data format section, the property names cannot contain Chinese characters. However, you can set a display name for the property in Sensors Analytics by configuring --add_cname. For example, the format of buy.csv is as follows:

用户名,购买时间,商品id,商品名称,商品类别 小明,2015-01-20 10:35:22,13579,真皮帽子 男士护耳保暖鸭舌皮帽平顶八角帽头层牛皮帽子时尚休闲,男装 小芳,2015-07-13 23:12:03,24680,官方正品ZINO 3D透亮无瑕BB霜SPF30PA++ 防晒遮瑕美白 小样 3ml,护肤 小武,2015-04-03 20:30:01,31415,New Order Technique 2CD豪华版 欧版行货 全新未拆,音像The import parameters are as follows:

python3 format_importer.py csv_event \ --url 'http://localhost:8106/sa?project=xxx' \ --event_default 'UserBuy' \ --distinct_id_from '用户名' \ --is_login \ --timestamp_from '购买时间' \ --filename 'buy.csv' \ --add_cname \ --web_url 'http://localhost:8107' \ --username admin \ --password passwordNote that different platforms have different encoding requirements, so make sure the default encoding matches the file encoding. Please refer to the instructions for using Windows for specific details.

The web_url and url are basically the same, except for the port number. Refer to the previous content for the method of obtaining the url.

2. How to configure Nginx to filter static files?

If the Nginx log contains requests for gif, css, and js files that need to be filtered, you can use --filter_path to filter them out.

python3 format_importer.py nginx_event \ --filter_path '.*\.gif' \ --filter_path '.*\.css' \ --filter_path '.*\.js' \ # 其他参数。。。3. What should I do if an error occurs during the import?

When a parsing error occurs, the import tool will print the error reason and the line number of the error directly in the terminal, and then continue processing by discarding the erroneous data. The printed log is similar to:

2015-10-28 14:58:52,020 808 WARNING failed to parse line 12 2015-10-28 14:58:52,021 809 WARNING Traceback (most recent call last): File "format_importer.py", line 804, in main sa.track(distinct_id, event, properties) File "/Users/padme/git/sa2/tools/format_importer/sensorsanalytics/sdk.py", line 118, in track data = self._normalize_data(data) File "/Users/padme/git/sa2/tools/format_importer/sensorsanalytics/sdk.py", line 149, in _normalize_data raise SensorsAnalyticsIllegalDataException("property [distinct_id] must not be empty") sensorsanalytics.sdk.SensorsAnalyticsIllegalDataException: property [distinct_id] must not be emptyAt the end of the run, it will print the number of lines read (total_read), written (total_write), error (error), and skipped (skip), similar to:

2015-10-28 14:58:52,023 618 INFO end import nginx 2015-10-28 14:58:52,024 838 INFO counter = {'error': 3, 'skip': 0, 'total': 300, 'total_read': 100, 'total_write': 97}.If you want to be prompted when an error occurs, you can add the option --quit_on_error. In this case, the error log will be as follows:

2015-10-28 14:58:29,499 808 WARNING failed to parse line 12 2015-10-28 14:58:29,502 809 WARNING Traceback (most recent call last): File "format_importer.py", line 804, in main sa.track(distinct_id, event, properties) File "/Users/padme/git/sa2/tools/format_importer/sensorsanalytics/sdk.py", line 118, in track data = self._normalize_data(data) File "/Users/padme/git/sa2/tools/format_importer/sensorsanalytics/sdk.py", line 149, in _normalize_data raise SensorsAnalyticsIllegalDataException("property [distinct_id] must not be empty") sensorsanalytics.sdk.SensorsAnalyticsIllegalDataException: property [distinct_id] must not be empty 2015-10-28 14:58:29,502 618 INFO end import nginx 2015-10-28 14:58:29,502 835 ERROR failed to import, please fix it and run with[--skip_cnt 11] again!Note the prompt below, which indicates that 11 lines have been successfully imported. After fixing the data in the 12th line, add the parameter --skip_cnt 11 to the previous command.

It is important to note that for MySQL, in order to prevent irreversible data errors, please ensure that querying the SQL multiple times will yield consistent results, i.e.:

- No new data is being written, for example, by adding WHERE to ensure only historical data is imported.

- Adding sorting options to the query result to ensure consistent order, for example, by adding ORDER BY.

4. When using MySQL to join two tables and import them, why am I getting the error 'distinct_id / timestamp / event is empty' even though there is data?

Note that if the SQL involves joining two tables, the column names must be unique or aliases must be used. The column names here represent the parameters for distinct_id_from, timestamp_from, and event_from, as well as the names of the imported properties.

For example, the SQL is as follows:

SELECT a.uid, a.event, a.time, b.property1, b.property2 FROM a JOIN b ON a.action_id = b.action_id

The running parameters need to be specified as:

--distinct_id_from 'uid' \ --timestamp_from 'time' \ --event_from 'event'

The imported property names are (property1, property2) instead of (b.property1, b.property2).

If the column names are not unique, another way is to use aliases. For example, the SQL statement is as follows:

SELECT a.u AS uid, a.e AS event, a.t AS time, b.property AS property1, a.property AS property2 FROM a JOIN b ON a.action_id = b.action_id

The specified parameters to run are:

--distinct_id_from 'uid' \ --timestamp_from 'time' \ --event_from 'event'

The imported property names are (property1, property2).

5. How to convert a value to a text/numeric format when importing using MySQL?

MySQL has a CAST function to support type conversion.

For example, if there is a column named property1 of type int and a column named property2 of type varchar(10) in MySQL, you can use the following SQL statement:

SELECT CAST(property1 AS CHAR(10)) AS property1, CAST(property2 AS SIGNED) AS property2 FROM test_table;

This converts the value of property1 to a text of length 10 and the value of property2 to numeric.

6. How to import data for other projects?

If the project is not specified, it will be imported to the default project "default".

You can specify the project name in the --url parameter as project=<project_name>, or use the --project parameter. If both are specified, the --project parameter will be used.

Note that if the value of --url is obtained by copying the data receiving URL in "Account" -> "Data Import" -> "Copy Data Receiving URL", the copied URL already includes the project parameter and no additional specification is required.

In addition, if importing JSON logs, you can add "project":"project_name" in the JSON to import the data to multiple projects with one data source. The project field in the log takes priority over the parameter.

7. How to escape CSV?

CSV is a comma-separated file format. If a column's content contains a comma, it needs to be enclosed in double quotes, otherwise FormatImporter will throw an error. For example, the content of a CSV file is as follows:

col1,col2,col3 a,b,c,d

An error will occur at runtime:

csv error near line 1: content has 1 more fields than header

The correct approach is to add double quotes:

col1,col2,col3 a,"b,c",d

It will be recognized as:

| col1 | col2 | col3 |

| a | b,c | d |

Note that double quotes can span across lines. For example, the content of a CSV file is as follows:

col1,col2,col3 a,"b,c d,e",f

It will be recognized as:

| col1 | col2 | col3 |

| a | b,c,d,e | f |

Therefore, if a column starts with double quotation marks, CSV will keep looking for the next double quotation mark as the end. If there are too many characters between two double quotation marks, an error will occur:

_csv.Error: field larger than field limit (131072)

There are two solutions. One is to replace the default string boundary symbol of CSV with the --csv_quotechar parameter, ensuring that this symbol has not appeared before. The other is to escape the double quotation mark by turning one double quotation mark into two, and to enclose this column with double quotation marks. For example, the content of the CSV file is as follows:

col1,col2,col3 a,"""b",c

It will be recognized as:

| col1 | col2 | col3 |

| a | "b | c |

8. Instructions for using on Windows

When using FormatImporter on Windows, due to the differences in terminal syntax and default encoding, there are a few things to note:

1. It is recommended to pass parameters through configuration files rather than command lines to avoid command escaping. Note that the commands in the above examples are based on Unix operating systems. If you run them directly on Windows, syntax errors or escapes may occur.

2. CSV files default to utf-8 encoding. If there are encoding errors, you can first check the encoding format of the file, and then use the --file_encoding command to set the encoding format.

3. If you need to access MySQL/Oracle under Windows, special processing needs to be done for encoding. Please consult the support team of Sensors Data for specific handling methods.

9. Import Limitations

When importing data, there is no data volume limit for the FormatImporter import itself. However, if the data being imported is large, the import speed will be slower, approximately 500-700 rows per minute. The import speed is also dependent on the stability of the network. If the import times out, you can try using the internal network data receiving URL.

Note: The content of this document is a technical document that provides details on how to use the Sensors product and does not include sales terms; the specific content of enterprise procurement products and technical services shall be subject to the commercial procurement contract.

Popular Searches

Popular Searches