LogAgent

Before using, please read the Data Model and Data Format introduction.

Click here to download the latest version of LogAgent

1. Overview

LogAgent is a tool used to import backend data into Sensors Analytics. It generally runs on servers that generate production logs.

Typical scenarios include:

- My program cannot conveniently embed the Sensors Analytics SDK, but I want to import the data output by the program into Sensors Analytics;

- I want to generate import data for Sensors Analytics locally and keep a complete copy locally;

- I don't want to control the data sending progress myself, but I want the data sent without duplication or omission.

In these cases, you can consider using LogAgent for data import.

LogAgent does one simple job: it tails the files in a directory according to the configured rules:

- If new data is found, it performs a simple format validation, packages the data, and sends it to Sensors Analytics;

- If no new data is found, it waits for a period of time and then checks again.

When LogAgent starts, it asks Sensors Analytics how far the files in the local directory have already been sent, so that it can resume from that point (breakpoint resume).
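The polling behaviour described above can be pictured with a small sketch. It is not LogAgent's actual implementation: the function name `read_new_lines`, the byte-offset bookkeeping, and the assumption that every record ends with a newline are all illustrative.

```python
def read_new_lines(path, offset):
    """Return (lines, new_offset) for complete lines appended after `offset`.

    Hypothetical helper: LogAgent's real reader is not shown in this document.
    """
    with open(path, "rb") as f:
        f.seek(offset)
        chunk = f.read()
    # Consume only up to the last newline, so a half-written record is left
    # in place for the next poll.
    end = chunk.rfind(b"\n") + 1
    lines = [line.decode("utf-8") for line in chunk[:end].splitlines()]
    return lines, offset + end
```

A caller would invoke this in a loop, sleep for a while whenever `lines` comes back empty, and persist `new_offset` only after the batch has been sent, which is one way to avoid both duplication and omission.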

2. Usage

2.1 Operating Environment

LogAgent supports deployment in both Linux and Windows environments. When deploying in a Windows environment, attention should be paid to file encoding issues. For specific considerations, please refer to Section 3.6 of this article.

2.2 Usage Steps

In the following example, we use the Linux environment to explain the usage steps of LogAgent.

1. Download and unzip the LogAgent deployment package:

wget <LogAgent package URL; see the download link above>

tar xvf log_agent-XXXX.tar

cd log_agent

2. Check the runtime environment (Java 8 or above is required):

bin/check-java

If prompted with "Can't find java", set the JAVA_HOME environment variable, or use the provided script (Linux only) to install a portable JRE. The JRE is unpacked into the LogAgent installation directory and does not affect other Java environments on the system:

bin/install-jre

3. Edit the configuration file:

Edit the logagent.conf file in the LogAgent installation package directly. Refer to the LogAgent scenario usage examples and configure each option in logagent.conf according to its instructions.

4. Check the configuration file:

bin/logagent --show_conf

The output is similar to the following:

17/09/22 15:16:27 INFO logagent.LogAgentMain: LogAgent version: SensorsAnalytics LogAgent_20170922

17/09/22 15:16:27 INFO logagent.LogAgentMain: Use FQDN as LogAgentID

17/09/22 15:16:27 INFO logagent.LogAgentMain: LogAgent ID: test01.sa

17/09/22 15:16:27 INFO logagent.LogAgentMain: Source config, path: '/home/work/data', pattern: 'service_log.*'

17/09/22 15:16:27 INFO logagent.LogAgentMain: Service Uri: http://10.10.90.131:8106/log_agent

17/09/22 15:16:27 INFO logagent.LogAgentMain: Sender Type: BatchSender

17/09/22 15:16:27 INFO logagent.LogAgentMain: Pid file: /home/work/logagent/logagent.pid

The latest version of LogAgent simplifies the configuration check and may print fewer log lines than the example above. Future versions will add more logs.

5. Start LogAgent:

Execute the following command to run LogAgent in the background:

nohup bin/logagent >/dev/null 2>&1 &

In the new version, you can use the following command directly:

bin/start

If the pid file shown in Step 4 appears (see "Pid file" in the last line of the Step 4 example output), the configuration has been loaded and LogAgent has started successfully.

If LogAgent fails to start, check the logs in the log directory to find the problem, or start LogAgent in the foreground to see whether any error is reported:

bin/logagent

6. Stop LogAgent:

Execute the following command in the LogAgent directory to kill the process:

kill $(cat logagent.pid)

In the new version of LogAgent, you can use the following command directly:

bin/stop

7. Start multiple LogAgents on one machine:

A LogAgent program directory can start only one LogAgent process instance. If you need to start multiple LogAgents, deploy them in different directories and start them separately.

8. Use LogAgent to verify data:

To verify data formats and field types, you can use LogAgent's DebugSender. LogAgent verifies data as follows:

- LogAgent first checks locally whether the data format is valid, for example whether each record is valid JSON and whether required fields (distinct_id, etc.) exist.

- Data that passes the local check is sent to the remote end, which verifies the data content, such as field types and track_signup.

If verification fails, the data and the cause of the error are printed in the log. If debug_exit_with_bad_record=true is set in the configuration file, LogAgent stops processing the remaining data.

Verified data can be imported directly into Sensors Analytics, or you can set the configuration item debug_not_import=true so that it is not imported.

- Start LogAgent to verify data. Data that passes verification is imported into the system, and error logs are generated when verification fails:

sender=DebugSender

- Start LogAgent to verify data without importing the verified data into the system. LogAgent exits when it encounters bad data:

sender=DebugSender

debug_exit_with_bad_record=true

debug_not_import=true

Parameter description:

- debug_exit_with_bad_record: When this parameter is set, LogAgent prints the bad record and the cause of the error when it encounters faulty data, and then exits.

- debug_not_import: When this parameter is set, verified data is not written into the analysis system.

Note: Data verification is generally used to check whether the format and field types of a small amount of data are correct. It is not suitable for production environments with large amounts of data.
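As a rough illustration of the local check in step 8, the sketch below tests whether a line is valid JSON and whether required fields are present. It is only a sketch under stated assumptions: `check_record` and `REQUIRED_FIELDS` are hypothetical names, and `distinct_id` is the only required field this document names, so the real list of checks may be longer.

```python
import json

# Assumption: only distinct_id is named in this document; extend as needed.
REQUIRED_FIELDS = ("distinct_id",)


def check_record(line, required=REQUIRED_FIELDS):
    """Return None if the line passes the local check, else an error string."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return f"invalid JSON: {exc.msg}"
    if not isinstance(record, dict):
        return "record is not a JSON object"
    missing = [name for name in required if name not in record]
    if missing:
        return "missing required fields: " + ", ".join(missing)
    return None
```

Running a few sample lines through a check like this before starting LogAgent with DebugSender can catch obvious format problems early. Field-type checks still happen on the remote end.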

2.3 Notes

- When the data receiving address is behind a load balancer, ensure that requests from a given LogAgent always land on the same data receiver and are not routed randomly.

- When data is delayed on the server, do not change the data receiving address at will. For other details, please contact Sensors technical support.

3. Q&A

3.1 File read sequence

The list of files read by LogAgent consists of the files in the path directory that match pattern, plus the file specified by real_time_file_name (if any). All other files are ignored, which means that the real_time_file_name file must match pattern after it is renamed.

LogAgent sorts all files in the specified directory that match pattern in lexicographical order and reads them in sequence;

real_time_file_name (if configured) is always placed at the end of the file list, indicating that it is the latest file.
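The ordering rule can be sketched as follows. This is an illustration, not LogAgent's code: `build_file_list` is a hypothetical name, and treating pattern as a shell-style wildcard (via `fnmatch`) is an assumption, since this document does not state the pattern syntax.

```python
from fnmatch import fnmatchcase


def build_file_list(names, pattern, real_time_file_name=None):
    """Order candidate file names the way this section describes."""
    # Assumption: pattern is interpreted as a shell-style wildcard.
    matched = sorted(
        n for n in names
        if fnmatchcase(n, pattern) and n != real_time_file_name
    )
    # The real-time file, if configured and present, is always read last.
    if real_time_file_name is not None and real_time_file_name in names:
        matched.append(real_time_file_name)
    return matched
```

For example, with pattern `service.log.*` and real_time_file_name `service.log`, the dated files come first in lexicographical order and `service.log` is placed at the end; files matching neither rule are dropped.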

3.2 File Reading Process

Suppose there are the following files in directory path:

service.log.20160817

service.log.20160818

service.log.20160819

- When reading of a file such as service.log.20160818 reaches the end, LogAgent refreshes the directory file list. If the file currently being read, service.log.20160818, is not the last file in the list, a file for a later time point, such as service.log.20160819, must exist. LogAgent therefore marks the current file service.log.20160818 as finished (that is, the file is no longer being written to).

- LogAgent reads the current file once more. If there is still no data, it closes service.log.20160818 and starts reading the next file, service.log.20160819, from the beginning.

- Note: After switching to service.log.20160819, LogAgent does not read any data from files that sort before service.log.20160819 in lexicographical order.
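The end-of-file decision described above can be condensed into a small sketch. The function name and its arguments are hypothetical and chosen only for illustration; `file_list` stands for the refreshed, ordered file list, and `got_data_on_reread` for the result of the final re-read.

```python
def next_file_at_eof(current, file_list, got_data_on_reread):
    """Decide which file to read after reaching the end of `current`."""
    index = file_list.index(current)
    if index == len(file_list) - 1:
        # Still the newest file: keep waiting for appended data.
        return current
    if got_data_on_reread:
        # The final re-read found more data; drain it before switching.
        return current
    # Finished and drained: move forward. Earlier files are never revisited.
    return file_list[index + 1]
```

The extra re-read before switching covers the case where the writer appended a last batch of records to the old file just before rotating to the new one.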

3.3 Get Reading Progress

Use the file-list-tool to obtain the current reading progress, the list of files already read, and the list of files not yet read.

| Functionality | Script Path | Execution Statement |
|---|---|---|
| Obtain progress | bin/file-list-tool | |
| Get list of files already read | bin/file-list-tool | |
| Get list of files not yet read | bin/file-list-tool | |

3.4 Troubleshooting when data is not sent

There can be multiple reasons why data is not being read and sent correctly. Please follow the steps below to troubleshoot:

1. Is there any error information in the logagent.log file in the log directory under the LogAgent directory? The error could be related to data format or network issues. The problematic data will be filtered by LogAgent and not imported. It will be recorded in the invalid_records file in the log directory.

2. Does the file to be read match the configured pattern? Files that do not match the pattern will not be scanned by LogAgent.

3. Is the file to be read located before the current progress in the file list? For example, suppose the current directory contains the following files:

data.2016090120

data.2016090121

a. Assume LogAgent is currently reading data.2016090121.

b. A new file data.20160901 is added. It will not be read, because when the files in the directory are sorted in lexicographical order, data.20160901 comes before data.2016090121;

c. If data is appended to data.2016090120, it will not be read, because LogAgent does not read data from files that come before the file currently being read, data.2016090121.

4. Are multiple machines using the same LogAgent ID? This issue typically shows up as Received > Verified in Tracking Management. The LogAgent ID is used for deduplication of data on the server side. If the LogAgent ID is not specified in the configuration file, the FQDN is used as the default LogAgent ID. In this case, check whether the machines have the same FQDN.

5. Has the file being read been deleted or truncated? Do not delete or truncate the file that LogAgent is currently reading. LogAgent's progress consists of the file currently being read and the position within that file. If this file is deleted, LogAgent cannot tell which file to read or from which position. Refer to the LogAgent log for instructions on how to handle this situation.

The steps for the old version are as follows:

a. Stop LogAgent;

b. Move the files that have been read from the data directory to another directory;

c. Modify the configuration file to use the new logagent_id;

d. Delete the logagent.pid.context file;

e. Start LogAgent;

Step c makes the newly started LogAgent start from the beginning and send all files. Step b prevents data that has already been sent from being sent again after this restart.

For the new version, you can directly use the command for one-click exception handling:

bin/abnormal-reset --context_file ./logagent.pid.context

Run the above command in the log_agent directory of the new version. After the reset, use the bin/start command to restart LogAgent and continue running.

6. Has the data file being sent been edited with a tool such as "Notepad"? Text editors like "Notepad" may delete or truncate the old file and write a new one when saving. This causes the problem described in item 5: deleting or truncating the file being read.

7. There is no obvious error information in the LogAgent log, and the server cannot find the corresponding data. LogAgent only checks if the data meets the basic format requirements. Other detailed checks, such as data field types, are performed in the server module. Please check the Tracking Management of the imported project for error information.
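For item 3 of the checklist above, a quick way to see which files fall before the current progress is to compare names as plain strings, which is what lexicographical ordering means. A minimal sketch, with `skipped_files` as a hypothetical helper name:

```python
def skipped_files(names, current_file):
    """Return the names that sort before the file currently being read."""
    # Plain string comparison is lexicographical: a name that is a prefix of
    # another (data.20160901 vs data.2016090121) sorts first.
    return sorted(n for n in names if n < current_file)
```

With the files from the example, both `data.20160901` and `data.2016090120` sort before `data.2016090121`, so neither a newly added `data.20160901` nor data appended to `data.2016090120` would be picked up.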

3.5 Checking the LogAgent running status

LogAgent outputs logs in the following format every 10 seconds:

LogAgent send speed: 1.200 records/sec, reading file: /home/work/app/logs/service.log.2016-08-17 (key: (dev=fc01,ino=1197810), offset: 122071 / 145071).

Explanation of the values in the example:

- send speed: 1.200 records/sec: average speed calculated based on the previous 10 seconds' processing;

- reading file: /home/work/app/logs/service.log.2016-08-17: path of the file being read;

- key: (dev=fc01,ino=1197810): disk and inode where the file is located;

- offset: 122071 / 145071: the first value is the position of the file being read, the second value is the total size of the file being read.
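If you want to monitor these values from a script, a status line in this format can be parsed with a regular expression. The sketch below is based solely on the single example line shown above; the names `STATUS_RE` and `parse_status` are hypothetical, and other LogAgent versions may format the line differently.

```python
import re

# Pattern derived from the one example line in this section (an assumption).
STATUS_RE = re.compile(
    r"send speed: (?P<speed>[\d.]+) records/sec, "
    r"reading file: (?P<path>.+?) "
    r"\(key: \(dev=(?P<dev>\w+),ino=(?P<ino>\d+)\), "
    r"offset: (?P<offset>\d+) / (?P<size>\d+)\)"
)


def parse_status(line):
    """Extract the fields of a status line, or return None if it does not match."""
    m = STATUS_RE.search(line)
    if m is None:
        return None
    offset, size = int(m["offset"]), int(m["size"])
    return {
        "speed": float(m["speed"]),
        "path": m["path"],
        "dev": m["dev"],
        "ino": int(m["ino"]),
        "offset": offset,
        "size": size,
        "remaining_bytes": size - offset,
    }
```

The derived `remaining_bytes` value (size minus offset) gives a simple measure of how far LogAgent lags behind the file being written.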

3.6 Using LogAgent on Windows

The real_time_file_name parameter is not supported on Windows, so renaming of the real-time file cannot be tracked;

A Java 8 runtime environment is required;

If the configuration file appears to contain only one line, try opening it with "WordPad" (not Notepad) or Notepad++;

On Windows, pay attention to backslash escaping when configuring the path. For example, if the path is:

D:\data\logs

it must be written in the configuration file as:

path=D:\\data\\logs

Other usage is the same as described in this document: first fill in the configuration file, then run bin/logagent.bat to start LogAgent.
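When configuration files are generated by a script, the escaping rule above amounts to doubling every backslash. A minimal sketch, with `escape_conf_path` as a hypothetical helper name:

```python
def escape_conf_path(windows_path):
    """Double each backslash so the path can be written into logagent.conf."""
    return windows_path.replace("\\", "\\\\")


# prints: path=D:\\data\\logs
print("path=" + escape_conf_path(r"D:\data\logs"))
```

The raw-string prefix `r"..."` keeps Python itself from interpreting the backslashes in the input path.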

3.7 Upgrading LogAgent

The steps are as follows:

- Stop the running LogAgent; you can kill the process to make it exit;

- Download the new version and unzip it;

- Replace the bin and lib directories in the previous LogAgent deployment directory with those from the new version;

- Start LogAgent with the same parameters and configuration file as before.

In addition:

If you upgrade from a version before 20171028 to the new version, you need to add the "pid_file" item to the old configuration file (the new configuration file already includes it).

# Path of the pid file. If specified, a pid file is generated; if the pid file already exists at startup, LogAgent fails to start

pid_file=logagent.pid
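The "fail to start if the pid file already exists" behaviour described for pid_file is a common single-instance guard. How LogAgent implements it is not shown in this document; the sketch below only illustrates the general technique, using exclusive file creation and a hypothetical function name.

```python
import os


def create_pid_file(path):
    """Create the pid file atomically; raise FileExistsError if it already exists."""
    # O_EXCL makes creation fail when the file is already present, so a
    # second instance started from the same directory refuses to run.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o644)
    with os.fdopen(fd, "w") as f:
        f.write(str(os.getpid()))
```

A stale pid file left behind by a crashed process blocks startup in the same way, so it has to be removed by hand once you have confirmed that the process is no longer running.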

3.8 Breakpoint resume

Each triplet (LogAgent ID, path, pattern) corresponds to one data reading progress record (which file, identified by inode, and at which offset).

If the resume point recorded in the reading progress cannot be found (for example, no file with that inode exists, or the offset is greater than the file length), LogAgent fails to start and the process exits. In that case, you can clear the progress according to the prompt in the LogAgent log and start a new import.
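The two failure cases mentioned above (inode not found, offset beyond the file length) can be expressed as a small check. This is a sketch under stated assumptions, not LogAgent's code: `find_resume_point` is a hypothetical name, and the device number that also appears in the progress key is ignored for brevity.

```python
import os


def find_resume_point(directory, inode, offset):
    """Return the path to resume from, or None if the saved progress is unusable."""
    with os.scandir(directory) as entries:
        for entry in entries:
            if entry.is_file() and entry.inode() == inode:
                # An offset beyond the current length means the file was truncated.
                if offset > entry.stat().st_size:
                    return None
                return entry.path
    # No file with the recorded inode: it was deleted or replaced.
    return None
```

Looking the file up by inode rather than by name is what lets the progress survive a rename, such as a real-time file being rotated to a dated name.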

3.9 Log cleaning

Production log cleaning: You can configure parameters such as "operate_read_files_type" (processing type), "operate_read_files_before_days" (processing rules), and "operate_read_files_cron" (processing frequency) in logagent.conf.

Compression is the recommended choice. Configure scheduled log deletion with caution: if logs are deleted because of incorrect configuration parameters, you bear the responsibility.

Running log cleaning: The running log is located in the log folder of LogAgent and takes up relatively little storage. You can also consider deleting it.

Deletion method:

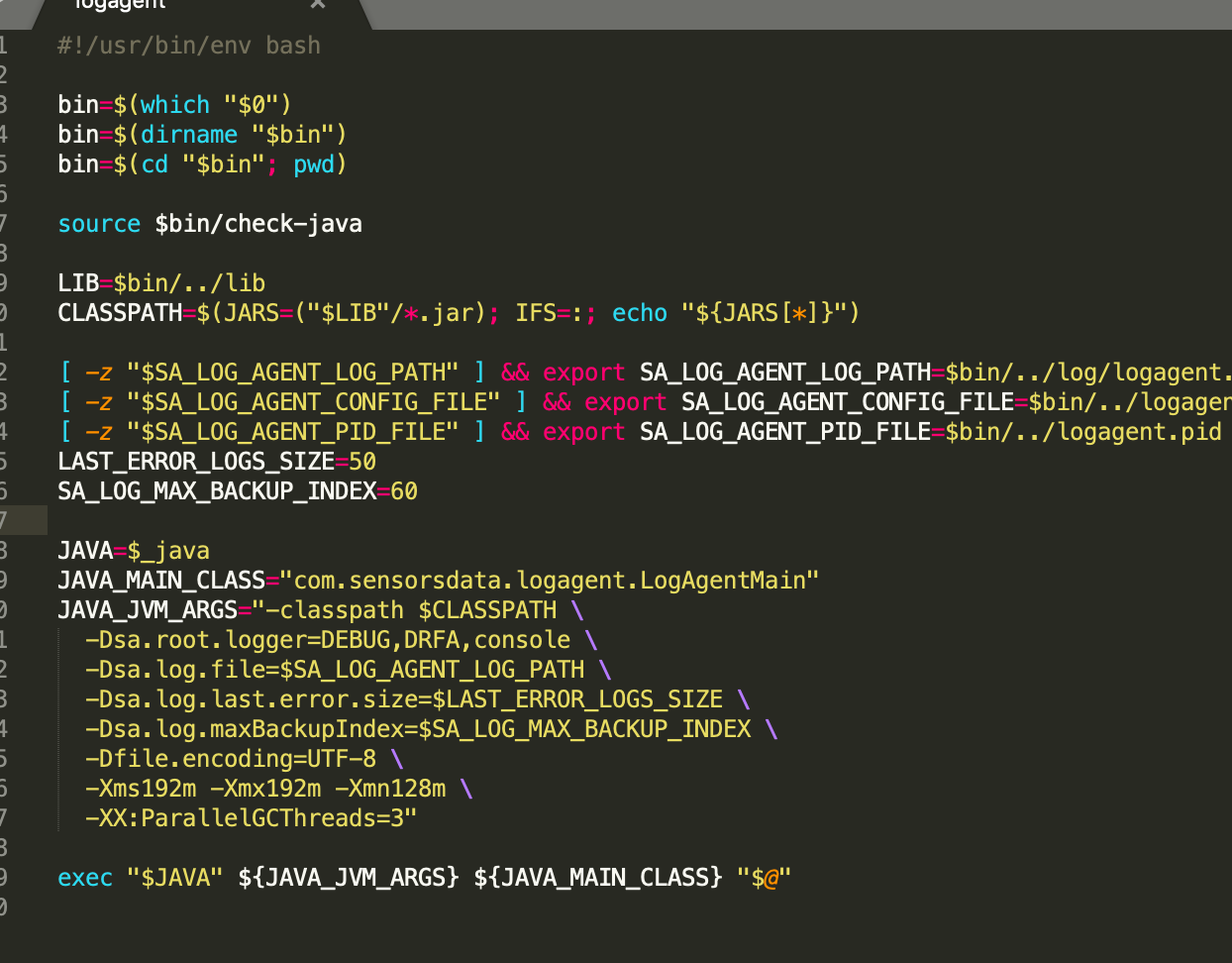

1. Find the bin/logagent file in the logagent directory and open it with a file editor.

2. Modify the SA_LOG_MAX_BACKUP_INDEX parameter, which is set to clean up every 60 days by default but can be modified as needed.

Note: The content of this document is a technical document that provides details on how to use the Sensors product and does not include sales terms; the specific content of enterprise procurement products and technical services shall be subject to the commercial procurement contract.
